How can generative AI be put to its best use while limiting the risk of “hallucinations”? Researchers propose an answer: a model that crosses two questions, how easily AI-generated information can be verified and how much its accuracy matters. It is a timely tool given the rapid spread of ChatGPT and similar technologies.

“Botshit.” The expression is not the most elegant, but it has the merit of being clear. Used in a Guardian article by Professor André Spicer of Bayes Business School in London, it roughly describes the tendency to say just about anything, coming not from humans (“bullshit”) but from machines! The phenomenon is closely tied to the spectacular emergence of generative artificial intelligence (AI) over the past year.

Let’s remember that these models are probabilistic. A generative AI answers questions based on statistical inference. It does not seek what is true or false (it has no way of knowing) but what is most probable. Often its answers are correct. But sometimes they are not, despite their apparent coherence. These incorrect answers are what we call “hallucinations.”

“When humans use this misleading content for tasks, it becomes what we call ‘botshit’,” says André Spicer.

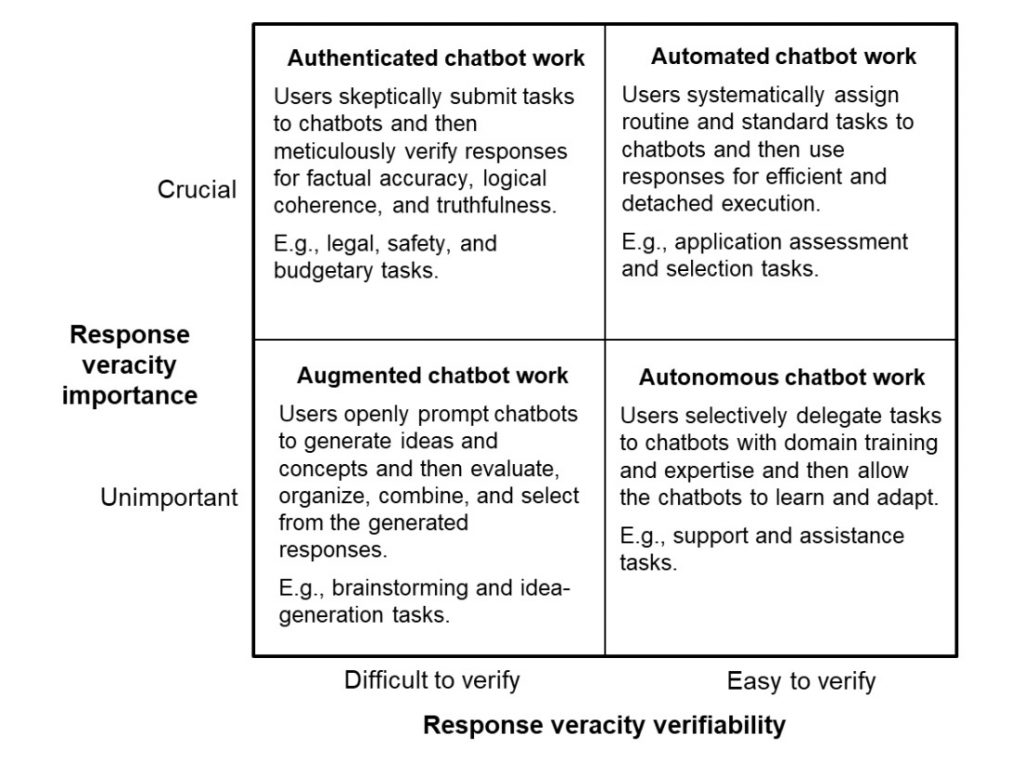

Based on this observation, the researcher and two of his colleagues propose, in an academic article published a few months ago, a way to classify the use cases of generative AI so as to mitigate these risks. The result is a model built on two dimensions:

- The ability to verify the truthfulness of the answers

- The importance of the truthfulness of the answers

Crossing these two dimensions gives four modes of collaboration between humans and generative AI.

- “Autonomous” Mode – Easily Verifiable Task and Low Stakes

In this first mode, the user can delegate specific tasks to the machine and quickly check the results, which is likely to increase productivity and eliminate routine work. As examples, the authors cite handling common customer queries or routine administrative requests.

- “Augmented” Mode – Hard-to-Verify Task and Low Stakes

In this case, the user should treat the AI-generated response as a way to “augment” their own capabilities rather than as a finished product. This mode is particularly suited to creative brainstorming and generating new ideas. The responses are not usable as they are, but once sorted, modified, and reworked, they can help increase productivity and creativity.

- “Automated” Mode – Easily Verifiable Task and High Stakes

Since the accuracy of the information is crucial here, users must verify the AI’s responses. They assign simple, routine, and relatively standardized tasks to the AI. The authors mention quality control as an example, such as analyzing and pre-approving loan applications in a bank.

“Large amounts of information could be used to verify the statements made. However, the decision will likely be relatively crucial and high-stakes. This means that even if the chatbot can automatically produce a truthful statement, it must still be verified and approved by a trained banker,” they indicate.

- “Authenticated” Mode – Hard-to-Verify Task and High Stakes

In this last and most problematic case, users must establish safeguards and maintain critical thinking and reasoning abilities to assess the truthfulness of the AI’s responses.

“In these contexts, users must structure their engagement with the chatbot’s response and adapt the responses as (un)certainties reveal themselves,” explain the authors.

An example? An investment decision in a new industry where precise information is scarce, which makes the AI’s responses on the matter difficult to verify. Even so, chatbots can highlight overlooked details or problems, adding depth and rigor to critical decisions, especially in uncertain or ambiguous situations.

A very easy-to-apply model in interactions with AI!
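
To show how little it takes to apply the grid, here is a minimal Python sketch that maps the two dimensions to the four modes described in this article. It illustrates the classification only and is not code from the researchers. The names `Mode`, `MODES`, and `collaboration_mode` are hypothetical, and reducing each dimension to a yes/no answer is a simplifying assumption.

```python
from enum import Enum


class Mode(Enum):
    """The four human-AI collaboration modes described in the article."""
    AUTONOMOUS = "autonomous"
    AUGMENTED = "augmented"
    AUTOMATED = "automated"
    AUTHENTICATED = "authenticated"


# Keys are (easy_to_verify, high_stakes). Treating both dimensions as
# booleans is an assumption made for illustration.
MODES = {
    (True, False): Mode.AUTONOMOUS,      # easy to verify, low stakes
    (False, False): Mode.AUGMENTED,      # hard to verify, low stakes
    (True, True): Mode.AUTOMATED,        # easy to verify, high stakes
    (False, True): Mode.AUTHENTICATED,   # hard to verify, high stakes
}


def collaboration_mode(easy_to_verify: bool, high_stakes: bool) -> Mode:
    """Return the mode matching a task's verifiability and stakes."""
    return MODES[(easy_to_verify, high_stakes)]


# Pre-approving a loan application: easy to verify, but high stakes.
print(collaboration_mode(easy_to_verify=True, high_stakes=True).value)
```

In practice, both dimensions are matters of degree, so deciding where a given task falls remains a human judgment call.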